Abstract

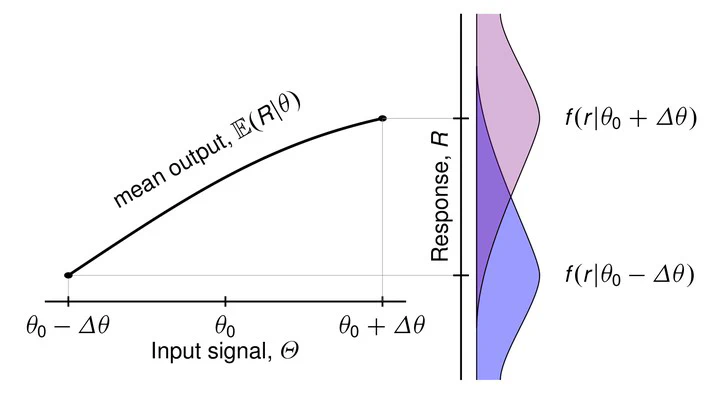

We derive an approximate expression for the mutual information in a broad class of discrete-time stationary channels with continuous input, under the constraint of vanishing input amplitude or power. The approximation describes the input by its covariance matrix, while the channel properties are described by the Fisher information matrix. This separation of input and channel properties allows us to analyze the optimality conditions in a convenient way. We show that input correlations in memoryless channels do not affect channel capacity, since their effect decreases rapidly with vanishing input amplitude or power. On the other hand, for channels with memory, properly matching the input covariances to the dependence structure of the noise may lead to almost noiseless information transfer, even for intermediate values of the noise correlations. Since many model systems described in mathematical neuroscience and biophysics operate in the high-noise regime and under weak-signal conditions, we believe that the described results are also of potential interest to researchers in these areas.